Two New Digital

Techniques For The Transfiguration Of Speech Into

Musical Sounds1

Richard

Boulanger

Abstract

Speech

and music have been inextricably entwined from their very beginnings. In the Western musical tradition there have been such

manifestations as chant, recitative, Sprechstimme,

and text-sound. Each of these can be

seen as a translation of a limited portion of the total speech into the

musical vocabulary of the age - speech intonation into melodic formulae,

prosodic rhythm into restricted patterns and sequences. Two digital filtering techniques provide new means for

transforming, speech into music. Mathematically these techniques can be described as linear filtering operations.

Musically they can be understood as means of specifying and controlling

resonances. The first, the Karplus-Strong plucked-string

algorithm, loosely models the acoustic behavior of a plucked string. The second, fast-convolution, is a procedure for

obtaining the product of the two spectra. The contribution of this

research lies both in the novel musical interpretation of these techniques and in the elucidation of their musical

use. A wide variety of musical applications are demonstrated through

taped sound examples. These include specifiable echo and reverberation,

generalized cross-synthesis, and dynamically controllable and tunable string resonance, all utilizing natural speech. Some of

these resemble prior musical applications of speech while others

represent entirely new possibilities.

A large body of research has addressed the application

of digital signal processing techniques to the

problems and technical concerns of speech communication2, concerns

ranging from the digital transmission and storage of speech, to its recognition,

enhancement, and synthesis. Applications have

included telecommunication, automated information

services, reading and writing machines, voice communication with computers, and

voice-activated security systems, to name a few.3 Speech has been a continuing

issue in music as well. From the time of the

Greeks to the present, innovative compositional applications of speech

have defined and redefined the boundaries of the field. Concerns have included

the intelligibility of the sung/spoken word and the control of its pitch,

onset, duration, amplitude, and timbre. Applications have included recitative, Sprechstirnrne, chant, and opera, to name a few3. Each of these innovative

compositional applications can be seen in part as a

response to technological advances, both advances introducing new tools

and advances offering new insights into the behavior and utility of existing

tools. The most. recent and potentially

most powerful conceptual and practical tools which have become available to the composer are those of

digital signal processing. While the compositional utility of signal

processing techniques is but a peripheral concern to the speech processing community, techniques such as the phase

vocoder and linear predictive coding offer the

composer tools to readdress fundamental concerns regarding speech as music. In this

paper, I demonstrate the musical utility of two recent digital techniques, the Karplus-Strong plucked-string algorithm, and fast

convolution, and show how they can be used to transfigure can normal

speech into musical sound, creating effects which are both new and

powerful.

Of

Speech and Music

The precise beginnings of music

are unknown, though several theories place its origins in either exaggerated speech or in the ecstatic, percussive,

dance-oriented expression of biological and/or work rhythms.4

An interesting alternative is proposed by Leonard Bernstein in The Unanswered Question: Six

Talks at Harvard.5 He

suggests that song is the root of speech

communication and not the other way around. In support of this claim he cites the fact that vocalizations of non-human

primates consist almost entirely of changes in pitch and duration, and that speech articulations appear to be

characteristically human. According

to Pribram, such observations suggest that "at

the phonological level speech and music begin in phylogeny and ontogeny

with a common expressive mode."6

Whether or not music and speech

evolved from a common expressive mode, it is generally considered that speech

is a special kind of auditory stimulus, and that speech stimuli are perceived and processed in a different way from nonspeech stimuli. In support of this view Brian C.J. Moore

points to evidence derived from studies in categorical perception, which indicate that speech sounds can be

discriminated only when they are identified as being linguistically

different; from studies of cerebral asymmetry, which indicate that certain

parts of the brain are specialized for dealing with speech; and from the speechnonspeech dichotomy, in which speechlike

sounds are either perceived as speech or as something completely nonlinguistic.7

A number

of innovative historical manifestations of speech as music exist. These are

traceable as far back as the Greeks. The issue is not whether the solutions to

the problems associated with speech as music resulted from

intuitively tapping the common root of the two modalities (speech and

music), or whether they merely exploited the speech/non-speech

dichotomy and thereby demonstrate that speech sounds, when presented in non-linguistic constructions, are automatically

transformed into speech-music (i.e., non-speech). Those are issues for

musicological and psychological study and debate.

Valid historical solutions do

exist, and they can often be equated with musical innovation. As such these

innovative uses of sound continue to redefine the limits of musical possibility.

The following historical review highlights two innovative musical solutions,

solutions which resulted from the application of new technology to the fundamental problems of speech and

music. Granted, the history of music will provide the final say - the ultimate validation of

all theoretical propositions, still there is no denying that speech is transfigured into musical sound in

pieces such as Reich's Come Out, Eno's My

Life in the Bush of Ghosts, Berio's Thema (Omaggio a Joyce), Reynolds' Voicespace,

and Lansky's Six Fantasies on a Poem by Thomas Campion.

Two

Current Digital Techniques

Until recently, the technology did not exist by which the

acoustical components of speech could be

extracted for the purpose of systematic compositional exploration. Now that it

does, the potential for musical advancement rests on the innovative

applications of it.

Linear Predictive Coding and Its Musical Use in Dodge's Speechsongs

Charles Dodge is most notable for

his pioneering work in the musical applications of computer speech synthesis.

Using the computer and an analysis-based subtractive synthecic technique known as linear-predictive coding (LPC) Dodge

composes a music of true Speech

melodies - speechsongs. With LPC, any word or

phrase which has been analyzed can

be resynthesized directly. However, the true musical

utility of LPC lies in the fact that

the analysis operation effectively -decouples- the

parameters of pitch, time, and spectrum. Thus

the composer can arbitrarily and independently control these three fundamental

components of the analyzed speech in the same way that he would traditionally Compose and orchestrate the notes and rhythms of

a traditional piece of instrumental or vocal music.

For the first time we have in

electronic music the possibility of making something extremely concrete - that is, English communication

of words - which can be

extended

directly into abstract electronic sound. In the past, there's been quite an

abutment between the two. On the one hand, you've had

music such such as Milton Babbitt's, with its

sophisticated differentiations of timbre, pitch, and time; and on the other

hand, you've had musique concrete, consisting

of the reprocessings of

sounds made with microphones. The synthetic speech

process combines the two in

quite an

interesting way. It bridges that gap between recorded sound and synthesized

sound.8

Linear predictive coding is a method

of designing a filter to best approximate the spectrum of a given signal. Although the approximation

gives as a result a filter valid over a limited

time, it is often used to approximate time-variant waveforms by computing a

filter at certain intervals in time. This gives a series of filters, each one

of which best approximates the signal in its

neighborhood.9 In his survey paper on the

signal processing aspects of computer music, James A. Moorer describes the steps involved in the Dodge

"speechsong- analysis to synthesis process. It begins with reciting

the voice material into a microphone

which is connected to an analog-to-digital converter on the computer. The speech is then analyzed and the analysis produces

as intermediate data the voicing information (whether a periodic or

noise excitation is to be used), the pitch of the speech (if it is voiced), and

the filter coefficients, all at regularly spaced points in time. This data can be used to resynthesize

the original utterance directly, or it can be modified. For example, the utterance can be re-synthesized

at different rates by changing the parameters (pitch, filter coefficients, voiced/unvoiced decision) faster or

slower than they occur in the original utterance. Or the pitch can be changed without changing

the temporal succession simply by multiplying all

the pitches by a fixed scale factor.10 Normally if one takes recorded

speech and merely speeds up or slows down the waveform itself, the fundamental

frequency of the speech is changed as well as the tone quality. However, by

first analyzing the speech, one can change

the timing or the fundamental of the speech independently.

It is also

possible, using the LPC analysis/synthesis technique, to modify speech in ways which would be impossible

for a real speaker to do. Typically, white noise is used as the source function

for the resynthesis of aperiodic

speech sounds and a wide-band periodic

signal such as a pulse train is used as the source function for the resynthesis of the voiced portions of speech.

However, other driving functions might be employed. For example one might substitute a more complex signal such as the recording

of an orchestra for the pulse train and thereby achieve "talking

orchestra" effects. Dodge often mixes varying degrees of noise in with the

pulse train to create a sung/whispered speech (i.e., Sprechstimme).

Both the

"talking orchestra'. and Dodge's

synthetic Sprechstirnme effects are examples

of what is called "cross-synthesis." One often refers to

cross-synthesis to characterize the production of a sound that compounds

certain aspects of sound A and other aspects of sound B.11 Thus,

since the physical model of speech which is manifest in the LPC technique separates speech into two, fairly independent

components, the quasi-periodic excitation

of the vocal cords and the vocal-tract response (i.e., • formant structure) which

is varied through articulation, the possibility of independently

controlling either of these components of speech- by "certain

aspects" of other sounds only requires substitution. The results of these changes are

potentially musically significant.

The Phase Vocoder - Moorer's

Lions are Growing

Lions are Growing (1978) is a "study"

by Andy Moorer which uses computer treatment of the

sounds of the human voice. The clarity, range, and fidelity of the synthetic speech in it represent a significant

advance over that in Dodge's speechsong work. Actually,

the analysis-synthesis technique used to create Lions are Growing is not

LPC. Rather it uses the phase vocoder which,

particularly because of its potentially very

high-fidelity output, has a far greater musical potential. Of the piece Moorer writes:

This

study uses as text a poem by Richard Brautigan

entitled "Lions are Growing." All the sounds

are based on two readings of the poem by Charles Shere. These two readings were fed into the computer, then decomposed and analyzed by the computer into pitch, format

structure, and other parameters. The

composition of the piece, then, consisted of resynthesizing

the utterances, applying various transformations to the pitch, timing, and

sometimes formant structures. The resulting transformed sounds were

mixed together to form thick textures with as many as 30 voices sounding

simultaneously. Computer-generated

artificial reverberation was then applied and the sounds were

distributed between the two speakers. All but a very few of the sounds in this

study then are entirely computer synthetic, based on original human utterances.

In the

phase vocoder, an input signal is modeled as a sum of

sine waves and the parameters to be determined by analysis are the

time-varying amplitudes and frequencies of each sine wave. Since these sine

waves are not required to be harmonically related, this model is appropriate for a wide

variety of musical signals. By itself, the phase vocoder

can perform very high-fidelity time-scale

modification or pitch transposition of a wide range of sounds. In

conjunction with a standard software synthesis program, the phase vocoder can provide the composer with arbitrary control of

individual harmonics.12

Historically,

the phase vocoder comes from a long line of

voice coding techniques which

were developed primarily for reducing the bandwidth needed for satisfactory

transmission of speech over phone lines. The term vocoder is merely a contraction of the words "voice coder... Of the wide variety of

voice coding techniques the phase vocoder, so named

because it preserves the time varying "phase" of the input signal as

well as its frequency

and magnitude information, was first described in a 1966 article by Flanagan

and widen.

However,

only in the past ten years, primarily due to improvements in the speed and efficiency of implementations of the

technique, has the phase vocoder become so popular

and well understood, at least in signal processing circles.13

Although

undeniably a beautiful piece, Moorer's Lions are

Growing does not represent

the "next" innovative computer-based solution to the problem of

speech as music. Viewed from the historical

perspective, it is clearly a conventionalization of the Dodge innovation. As such, it

merely demonstrates, in a charming and humorous fashion, the degree to which this new analysis-synthesis

technique (the phase vocoder) can improve speechsong" fidelity. Obviously

high-fidelity is an important factor in music, but it is not necessarily a

significant or innovative one.

An

innovative speech-music application of the phase vocoder

has recently drawn the attention of composers and researchers at the

Computer Audio Research Laboratory at the

University of California in San Diego. It results from the time-expansion of

speech by extremely large factors (i.e., between 20 and 200). In these cases,

the speech is no longer discernible as such, but rather, the underlying structure

of the sound is brought to the perceptual

surface. This process of extreme time-expansion "magnifies," in a

sense, the -microevolution- of the spectral

components of speech, and effectively transforms spoken text into

musical texture. This application employs the phase vocoder

as a "sonic microscope" to reveal

the "symphony" underlying even the shortest of utterances. A music based on that which is revealed by these exploded

views could prove quite significant and the phase vocoder represents a powerful new

tool for music whose significance has yet to be fully recognized or

musically demonstrated.

Two

New Digital Techniques

The

Karplus-Strong Plucked-String Algorithm as a Tunable

Resonator

Techniques for the computer

control of pitch have an obvious significance for music. In particular, linear

prediction and phase vocoding were cited as two

recent computer-based methods for infusing speech with an arbitrarily

specifiable pitch. But these techniques demand both considerable computational

resources and considerable mathematical sophistication on the part of the program. In this

chapter an attractive new alternative to these

techniques is presented.

The basis

for this technique is the Karplus-Strong

plucked-string algorithm.14 The algorithm

itself consists of a recirculating delay line which

represents the string, a noise burst which represents the "pluck," and a

low-pass filter which simulates damping in the system_

Applications of this algorithm to date have focused primarily on extending its ability

to imitate subtle acoustic features and performance techniques associated with

real strings and string instruments. In contrast, this chapter investigates the

musical utility of the

system as a tunable resonator.

The

Ideal String

Any

dynamic system has two types of response - a forced response, and a natural response.

The natural response is that which continues after all excitation is removed.

In the case of a violin string, the natural response is that which follows

the pluck. The forced response, on the other hand, is that which occurs

in the presence of some form of excitation.

In the case of the violin string, forced response occurs when the string is bowed. The overall response of the system is

equal to the sum of the forced response and the natural response.

An ideal string15 is

a closed physical system consisting of an infinitely flexible wire, fixed at both ends, by anchors of infinite

mass, and characterized by three independent variables: mass, tension,

and length. When its vibration is excited by a one-time supply of energy, such as a pluck, two wave pulses propagate to the left

and right of the point of excitation, reach the anchors (which act as

nodes), and reflect back in reverse phase. A short time after the input has ceased, a series of "standing waves" results. The

specific point of excitation determines which standing waves are present.

Standing waves or "modes

of vibration" represent the only possible stable forms of vibration in a

system. In the case of the ideal string, only those standing waves which "fit" an integral number of times between the

anchor points are possible, because destructive interference would

effectively cancel out all others. Standing waves thus have frequencies that are integral multiples of a

fundamental frequency determined by the string's mass, tension, and

length. For both the ideal and the real string, altering any of these three

parameters alters the fundamental frequency.

An

important distinction between ideal and real strings concerns the dissipation

of energy. In the ideal string, those vibrational

modes which have not been canceled out will vibrate

indefinitely. But in a real string, all the wave energy gradually dissipates

via internal

friction (because a real string is not infinitely flexible and infinitely thin)

and through the anchors (which, in the case of a real string, are not of

infinite mass).

Another

notable distinction concerns the property of stiffness in real strings. Due to

stiffness, the frequencies of higher vibrational

modes of real strings are not exact integer multiples

of the frequency of the fundamental mode. Therefore stiffness can significantly

affect the

timbre of real strings.

The

Karplus-Strong Plucked-String Algorithm

The

Karplus-Strong plucked-string algorithm is a

computational model of a vibrating string based on physical resonating. In 1978

Alex Strong discovered a highly efficient

means of generating timbres similar to "slowly decaying plucked

strings" by averaging successive samples in a pre-loaded wave table. Behind Karplus and

Strong's research was the effort to develop

an algorithm which would produce "rich and natural" sounding timbres, yet which would be

inexpensively implementable in both hardware and

software on a personal computer. Since their algorithm merely adds and shifts

samples, it meets the above

criteria. But the limitations of small computer systems, particularly the absence of high-speed multiplication, impose

serious constraints on the algorithm's general musical utility. Still,

the Karplus-Strong plucked-string algorithm does

allow for a limited parametric control of pitch, amplitude, and decay-time.

Frequency resolution depends

largely on the length of the wave table and the

sampling-rate. A small wave table results in wide

spaces between the available frequencies. A larger one, while offering better

frequency resolution, can "adversely" affect the decay times. In either case. since the length of

the wave table must be an integer, not all frequencies

are available.

According

to the natural behavior of the system, the output amplitude is directly related to the amplitude of the

noise burst. As a means of controlling the output level Karplus and Strong recommend loading the wave table

with different functions or employing

other schemes for random generation. They further suggest that the pluck itself

might be eliminated by simultaneously starting two out of phase copies

and letting them drift apart.

Like

frequency, the decay-time resolution is dependent on the table-length. Higher

pitches will naturally have shorter decay-times than lower pitches. This is due

to • the

fact that higher frequencies are more attenuated by a two-point average, and

that a higher pitch means more trips through the attenuating loop in a given

time period. To stretch the decay-time of

higher pitches, Karplus and Strong suggest filling

the wave table with more copies of the input

function; to shorten the decay-time of lower pitches, they suggest using

alternate averaging schemes (such as a 1-2-1 recurrence).

The

Jaffe-Smith Extensions

An

implementation of the plucked-string algorithm on a high-powered computer by Jaffe and Smith allows

several modifications and extensions which substantially increase its musical utility.16 In particular,

Jaffe and Smith address the problems associated with the arbitrary

specification of frequency, amplitude and duration. They consider the Karplus-Strong plucked-string algorithm to be an

instrument-simulation algorithm which efficiently implements certain

kinds of filters, and they point out that, from the perspective of digital

filter theory, the system can be viewed as a delay line with no inputs.

To compensate for the

difference between the table-length-based frequency and the desired frequency, Jaffe and Smith suggest the insertion of a

first-order all-pass filter. Since an all-pass has a

constant amplitude response, it can contribute the necessary small delays to the feedback loop without altering the the loop gain (i.e., the phase delay can be selected so as to tune to the desired frequency

and thereby adjust the pitch without altering the timbre).

As a means of controlling the

output level, Jaffe and Smith recommend that a one-pole low-pass filter be applied to the

"pluck." This alters the spectral bandwidth of the noise; with

less high frequency energy in the table, the audible effect is of the string

being plucked lightly. Another possible means is to simply turn the output on

late, after some of the high frequency energy has died away.

To

lengthen the decay-time of higher pitches, Jaffe and Smith recommend weighting the two-point average. To

shorten the decay of lower pitches, they suggest adding a dampening factor.

Since the damping factor affects all harmonics equally, the relative decay

rates remain unchanged.

As well as their solutions to

some of the algorithm's fundamental limitations, Jaffe and Smith recommend the inclusion of several other filters as a

means to simulate subtle acoustic and performance features of real plucked

strings. For example, they suggest

that a comb filter can be used to effectively simulate the point of excitation

by imposing periodic nulls on the spectrum. Another filter can be

incorporated to simulate the difference between "up" and

"down" picking. They also note that an allpass

filter can be used to stretch the

frequencies of the upper harmonics out of exact integer multiples with the

fundamental and thereby effectively simulate varying degrees of string

stiffness.

In the cmusic software synthesis language the fltdelay

unit generator is an implementation

by Mark Dolson of the Jaffe-Smith extensions with

several additions. Most significant among

these is that he allows an arbitrary input signal to be substituted for the initial noise input - an extension originally

suggested by Karplus and Strong, but not otherwise

pursued. This feature expands the scope of the model from one which simulates a

limited class of plucked-string

timbres to a general-purpose tunable resonance system.

A

Conventional Application: The Plucked-String

Sound

Example III.1A17 demonstrates the general musical utility of the jitdell unit

generator in its typical application - as a means of producing plucked-string

timbres. The

main feature of this example is that it clearly reveals a •

"natural-sounding" timbre which is

indeed "string-like" and remains so throughout a wide range of

pitches and under a wide variety of "performance situations."

Furthermore, although the sound is clearly string-like, it does not

particularly sound like any identifiable string or stringed instrument.

The first and second sections

display a change in timbre associated with pitch height. As with real stringed

instruments, higher tones sound more brittle than lower ones. Section three demonstrates the added richness which results from

multiple "unison" strings as found,

for example, in the design of most conventional keyboard instruments. The fourth section demonstrates fltdelay's ability to

simulate a variety of string types, (e.g., nylon, metal, metal-wound). These different

"strings" result from the various parameter

settings of place and stiff. Parametric control of

string stiffness is one example of

the unique features of this computer model which have no real world parallel.

The fifth section demonstrates a technique

which is possible on some stringed instruments but not on all - glissando.

Without the physical limitations of real strings, glissandi of any contour,

range, and speed are now possible.

By the

very nature of this example, the musical utility of the algorithm is revealed,

and its ability to serve in numerous conventional applications is obvious. A more comprehensive musical

display of the Karplus-Strong plucked-string

algorithm's extensive and diverse sound repertoire can be found in David

Jaffe's four-channel tape composition Silicon Valley Breakdown.18

When

writing for tape and live instruments, electro-acoustic composers have often contrasted, complimented,

and/or challenged live performers with synthetic shadows or counterparts

of their acoustic instruments. In these situations the tape often plays the

part of the acoustic instrument's alter-ego. David Jaffe's May All Your

Children Be Acrobats (1981) is such an example. In the composition a

computer-generated tape (which features Karplus-Strong plucked-string generated sounds) accompanies

a female voice and eight real plucked-sting instruments (guitars). This

piece, and others which have followed,

demonstrate that in its conventional use, the Karplus-Strong

plucked-string algorithm provides a new, versatile, and flexible tool

for the continuation of this frequent musical practice.

A

New Application: The String-Resonator

The

utility of the Karplus-Strong algorithm in producing

a wide variety of

natural sounding plucked-string timbres has been established. What is far less

appreciated, though, is that the Karplus-Strong

plucked-string algorithm can also be used as a resonator.

The basic property of any

resonator is that it can only enhance energy which is already present in the input at the resonant frequencies. As a

consequence, the sound of any

resonated source can be thought of conceptually as the dynamic spectral

intersection of the source and resonator. Mathematically, the spectrum of

the resonated source is the product

of the two spectra. This product is a spectral intersection in the sense that

it is nonzero only at frequencies at which both the input and resonator

spectra are non-zero. The intersection

is dynamic in that the spectrum of the source is dynamically changing in time.

This

concept is particularly relevant for a speech input because speech consists of

both "voiced" and "un-voiced" segments, and because the

voiced segments typically have a

constantly varying pitch. This means that the pitch of the voiced segments will

not usually match the pitch of the resonator, and the

spectral intersection will be null. However, the unvoiced speech will always have energy in common with the

resonator, regardless of the

resonator's pitch. Thus, it is actually the unvoiced segments of speech that

are infused with pitch. Furthermore,

what is actually being said becomes quite important.

For instance, a resonator will have little effect if its input is simply

a sequence of vowels.

This is in contrast to the

techniques of Chapter II (linear prediction and phase vocoder)

in which the pitch of the voiced segments is controlled. In the case of linear prediction, pitch control is achieved by setting

an excitation signal to the desired pitch; in the case of the phase vocoder, the voiced pitch is simply transposed.

When using

the Karplus-Strong plucked-string algorithm as a

resonator of speech, the resulting percept is very much like the sound of

someone shouting into a piano with a single key depressed. The primary character of the model

(i.e., its string-like timbre) is also the

most salient characteristic of any speech which is resonated with it. This

percept is so strong that it no longer makes sense to think of

the model as a plucked-string or as jltdelay. Rather, by virtue of this

unique application and the general timbral character

of the resulting sound, the algorithm can be more appropriately understood as a

tunable "string-resonator."

An important distinction

between using a real string as a resonator and the Karplus-Strong string-resonator has to do with coupling. In the case of the

string-resonator the coupling is much

better than in real life; thus, far more of the voice energy is delivered

to the string.

For

musical applications, another important consideration is the relation between

the pitch of the voice and the pitch of the resonator. When the two are close

in pitch, they tend to present a more fused percept. As the pitches

become more disparate, there is still a clear sense of pitch, but the

resulting sound is divided into two components with the resonated pitch above

and the speech below. This is illustrated in Sound Example III.2A which

demonstrates the behavior of the string-resonator over a four octave frequency

range.

The example has been divided

into three sections based on the degree of fusion between spoken text and resonator. In the first section, the voice and

the string-resonator are heard as a relatively fused percept. The

apparent pitch is that to which the string-resonator

is tuned. In the second section, as the pitches rise, the two components - voice

and tuned resonance, become

progressively more distinct, and become parallel streams by Fs(4). A

feature of the third section is the bright ringing "sparkle"

associated with the noise components of the spoken text.

By virtue

of the string-resonator's ability to effectively infuse natural speech with pitch, a number of new musical

applications become possible. Sound Example III.2B demonstrates one such musical application which I call string-chanting.

The instrument is similar to the one above but for two additions:

parametric control of amplitude and parametric control of string stiffness.

These two additional controls allow for individual,

and variable setting of the balance and timbre of each string-resonated voice.

The score

divides into two sections. In the first, four voices enter independently, pseudo-canonically,

one approximately every 1.5 seconds. In the second section, six voices enter

simultaneously and cut off together at the end of the single line of text which

is the input.

A

String-Resonator with Varying Stiffness

The Jaffe and Smith extensions

of the Karplus-Strong plucked-string algorithm also extend its utility as a string-resonator. In

particular, their addition of an all-pass filter to

simulate string stiffness provides control of the resulting timbre of the

string-resonated input and thereby increases its general utility.

To this

point, the character of string-resonated speech has been a predominantly metallic

timbre which has been completely harmonic. This

naturally leads to comparisons with the contemporary musical practice of shouting into

an open piano with various keys depressed (e.g., Crumb's Makrokosrnos).

Without the Jaffe and Smith addition to simulate the various degrees of

stiffness associated with real strings, this metallic timbre would always dominate the character of any

string-resonated input. However, by simply increasing the string-resonator's

stiffness

(i.e., stretching

the partials), the character of Sound Example III.3A is transformed from a dark

metallic timbre to a delicate "glasslike" one.

Control of

timbre musically significant, and various settings of stiff

correspond to different

string-resonated timbres. That the string-resonator's stiffness can be altered

parametrically further increases its utility, by allowing the note-level

control of subtle variations in spectral

shading. Thus, stiff can be seen as the string-resonator's general tone control.

A

String-Resonator with Varying Pitch

With the addition of a single

unit generator to the basic string-resonator instrument it is possible to alter

the frequency of the string as a function of time. This musically useful extension is made possible by the

design of the cmusic fltdelay

unit generator.

One of the

basic design features of fitdelay is that its

pitch argument accepts values via an input/output (i/o) block. An i/o block is like a patchcord. It holds the output of one unit generator in order to allow its use as the input of

another unit generator. In this case, the output for the dynamic control

of the string-resonator's pitch can come from cmusic's

trans unit generator.

The trans unit generator allows for the parametric

specification of the time, value, and exponential transition shape of a

function consisting of an arbitrary number of transitions. In

Sound Example III.4 a three point exponential transition is specified. The timing of the

function is fixed for all notes (i.e., 0, 1/3, 1) and the three frequency

values of the pitch

shift are specifiable parametrically.

Sound

Example III.4 divides into two sections. The first consists of six notes, each with

a unique pitch transition (i.e., up and down, up and up, etc.) and each featuring

a different

range and limit. None of the notes overlap.

The

second section is a musical application of pitch shifting

"string-resonators." All of the notes presented in the first section

are reused, and several additional notes are added. The duration of all

notes is doubled and many of them overlap.

It is

interesting to observe the degree of fusion under extreme pitch shifts such as these. In a number of cases,

the words themselves sound as if they have been stretched out of proportion.

The mutation of the spoken word "world" is an example of the type of

sounds which result from a recirculating delay whose pitch

(i.e., delay) is continuously shifted.

As regards the implementation

of the model, a relevant point is that the decay factor is set at the beginning of a note. This corresponds to setting the

appropriate damping in the

system whereby a note will turn off at the end of the duration set in p4. When the

pitch changes during the course of the note, the decay factor will remain

constant. Normally a low tone would require

a great deal of attenuation for it to have the same effective damping as a higher tone. Thus, since

the effective damping is not kept constant when pitches are shifted from

high to low, there is a noticeable shift in perceived sound quality, a -boominess- at the bottom end of a downward gliss.

The

ability to vary the pitch of the string-resonator during the course of the note

is another

extension of its musical utility. Given dynamic pitch control, one can easily infuse the speech with not only pitch, but with

musical vibrato, portamento, or glissando.

Within

the past ten years, a growing number of singers and composers have experimented

with the production and compositional specification of a wide range of extended

forms of vocal production.19 Example is a string-resonator emulation

of some of these musical practices (e.g., Roger Reynolds' Voicespace, Deborah Kavasch's

The Owl and the Pussycat, and

Arnold Schoenberg's Pierrot Lunaire). In this regard, this sound example demonstrates a feature of the

string model which has no real-world parallels. It is not possible to simultaneously vary the tuning of an

arbitrary number of resonators over such an wide pitch range and with such

accuracy except, perhaps, by demanding such from the vocal-tract resonant systems of a chorus of

extended-vocal-technique specialists such as Diamanda

Galas, or Philip Larson.20 Some of the extreme frequency shifts in

this specific musical example are not humanly possible.

String-Resonators

as Echo-Reverberators

The musical utility of the

string-resonator is not limited by the human capacity for pitch discrimination

(ca., 20Hz to 20kHz). In particular, when the

resonator is tuned to frequencies below

10Hz the mechanism of the model is revealed; repetitions of the input are

readily perceived as it recirculates through the

delay-line (i.e., "the string"). This extension of the string-resonator can be more

appropriately thought of as a "string-echo."

The

frequencies of the four notes in Sound Example III.5A range from 4Hz to 19Hz in 5Hz

steps. When tuning the resonator to such low frequencies the maximum length of the

delay-line must be altered from its default length of 1K-samples to 16K. This

is because the lowest fundamental frequency which can be generated by fltdelay is directly related to the length of the

delay-line by the equation:

F0 = R / L

where Fa is the

fundamental frequency, R is the sampling rate, and L is the length of the delay-line in samples. Thus with a 16K-sample maximum

delay and a 16KHz sample-rate, fltdelay can accurately

produce signals with fundamental frequencies as low as 1Hz.

The sound

of the first note, at 4Hz, is an echo of the input at a rate of 4 times per

second. The second note, at 9Hz, still has some sense of repetition associated

with it, but it also sounds as if someone is speaking into a large

metal cylinder. This is remarkable for a

frequency so low. The third note, at 14Hz, presents more of a flutter than an

echo, and the

fourth, at 19Hz, is pitched, but with a strong sense of roughness.

A point to note is that

string-echo via fltdelay is different from echo produced

via a recirculating comb filter. Fltdelay

has a frequency dependent loop gain whereas the recirculating comb filter does not. It is precisely this

frequency dependent loop gain which accounts for the characteristic

coloration of the model (i.e., its stringlike

timbre). This is because, in

fltdelay, as is the case in a real

string, high frequencies are more rapidly attenuated than low frequencies.

The technique of echo has long

been a staple of electro-acoustic composers, initially in the form of complex tape loop mechanisms (e.g., Oliveros' I of IV), later via analog delay circuits, and presently through the

proliferation of portable, programmable digital delay units. However, a

significant feature of string-echo not shared by any of the aforementioned devices is that of dynamic

repetition-rate control (i.e., pitch control) such as was shown in Sound

Example I11.4A.

In addition to the echo and

flutter effects which predominate at repetition rates below 20Hz, there is also another class of effects at the low end of

the audible pitch range. Sound Example III.5B, which illustrates these,

divides into two sections. The first is a sequential entry of four rising

pitches, and the second, a succession of two chords. This sound example

parallels the 111.2 series which also featured a rising series of string-resonated pitches followed by a multi-voice chord.

The only significant difference between the two examples is that III.5B

spans a lower pitch range (C0 to C2). The sound results

of the two examples, however, are not

parallel. In particular, when string-resonated chords are played in this low register, they are not

perceived as discrete harmonies at all. Rather, they give the impression of a highly colored reverberance,

a reverberance which is literable

tunable.

A strong sense of pitch

dominates each of the four notes in the first section of Sound Example III.5B. Although the degree of

fusion is clearly different for each note, and gets stronger as the pitch of the string-resonator gets higher, this

does not detract from the general perception of tuned resonance. In the

second section, however, when several differently tuned string-resonators play

simultaneously, the predominant sense is of a complex and diffused reverberance.

Perceptually,

this is not the effect that one might have expected, particularly given that

string-resonated chords are easily perceived as such when the component

frequencies are just one or two octaves higher, and, that even in

this register, single string-resonated notes are so clearly pitched.

Low-register string-resonated chords begin to approach a realistic reverberant

situation in which many different delay times are superposed.

The most salient feature of

both chords in the second section of Sound Example III.58 is that they provide

subtly differing resonances that might substitute for general, uncolored reverberation. Although it is difficult

to distinguish the specific harmony, it is clear that something about the intonation of each is different. Perhaps

successions of these discrete resonances could be used to subtly

approximate normal chord progressions.

Generally, a piece of music is

organized by the programmed control of its harmonic surface and substructure (cf., Schenker).21

The use of distinct colored resonances might provide a means for

establishing a complimentary, contradictory, or even an independent harmonic level coordinated with the musical performance.

Such a speculative application of the

string-resonator might provide a means by which a composer (or a program for

that matter) could enhance or obfuscate the clarity of the composition by underscoring

it with a specifiable and controllable harmonic reverberance.

A

String Resonator with Variable Excitation

To this

point the Karplus-Strong plucked-string algorithm has

been used strictly as

a resonator. However it is also possible to excite this resonator in a variety

of ways. Two different forms of excitation are demonstrated in this section.

The first results in a hybrid extension of

the string-resonator. The second (discussed in a different context by Jaffe

and Smith),22 is a form of what I call "sympathetic-speech." The design of fltdelay allows one to • simultaneously

excite the resonator with a speech

input and a noise burst (i.e., a pluck"). Even when an an

arbitrary input is specified, the noise is still

available, and its overall level can be controlled. A wide range of musical applications are accommodated by the

resulting combination of speaking and plucking as demonstrated in the

examples which follow.

Sound Example III.6A features

two different performances of an excerpt from an existing score which are played without pause between them. This score

includes a quick staccato opening and

a slower legato mid-section that offer a wide range of rhythmic/articulative

conditions under which the performance of the string-resonator can be

observed.

In the first version, the

excitation level is set to a constant value of .06. At this setting the level of the pseudo-pluck is

closely balanced with that of the string-resonated speech and the

rhythmic articulations are clarified, but not too artificially (i.e., the pseudo-pluck is present but not predominant). In

the second version the excitation level is controlled at the

note-statement level. Values range from .01 to .98.

The second

version demonstrates more clearly the range of articulative

complexes available

via this extension of the string-resonator. In this version, it is shown how

the parametric control of level can

provide a means of shaping the phrase structure by accenting certain

notes.

In order to simplify the coding

of "common-practice notation," as is the case for Sound Examples III.6A and B, a slightly different

score format is employed. The additional components of the score consist of

three macros and an instrument which plays rests. Of the three macros, one defines the tempo, another

calculates the duration of the notes as a fraction of the beat, and the third calculates the duration of the

rests as a fraction of the beat.

Sound Example III.6B features a

combination of speech and plucks on all three independent

parts of the previous contrapuntal score. Since all three use the same cmusic score and instrument format, they are

differentiated in several ways.

An

additional level of excitation control is used in this example. The attack of

the string-resonator

is smoothed by altering the onset of fltdelay.

In this specific case, the onset of the second and third voices is set to .01

which means that a 10 millisecond linear ramp is applied. The result of increasing the onset time of fltdelay is to soften the perceptual

pluck into something more like a "puff" of air.

The musical significance of

this excitation control might be considerable. In the last century, composers of acoustic music have become increasingly

interested in the structural

function of timbre (cf. Erickson). As a result, instrumental scores abound in

which timbre progressions or timbre modulations are themselves the

musical surface (e.g. Schoenberg's Five

Pieces for Orchestra #3, Ligeti's Lontano, and Crumb's Ancient Voices of Children).

A frequent technique has been to orchestrate the attack portion of a chord differently from the sustain portion. The

combination of varying classes of excitation with spoken words through

an extension of the string-resonator offers the composer a means of producing hybrid timbral

effects with considerable control over their balance and shading.

It is also possible to excite

one string-resonator by another, in which case one functions as a source resonator and, the other as a-sympathetic

resonator. There is no limit to the

number of such sympathetic resonators which can be incorporated in a single, complex string-resonator instrument. Furthermore, just as the pitch of

a simple string-resonator can be

arbitrarily tuned and dynamically altered, so too can the pitches of any number

of sympathetic resonators.

The model

for this behavior comes from multi-stringed acoustic instruments such as the piano or guitar. And, just as the piano gains a great deal of its spectral richness

from the free vibration of sympathetic strings, so too can the

string-resonator. In the present case, all partials of the source

resonator which do not coincide with those of the sympathetic resonators will be highly attenuated. Thus each sympathetic

resonator acts as a bank of very

narrow band-pass filters with center frequencies at

its partial frequencies.23 Sound

Example III.6C features a complex string-resonator instrument consisting of a

single string-resonated voice and

three sympathetically resonated voices.

To facilitate the independent

control of this phenomenon three instruments are employed. The first is a basic

string-resonator with an additional unit generator, shape, added to

control the envelope. The second instrument consists of three

sympathetically-resonated voices. It too employs the shape unit

generator for envelope control. The third instrument is responsible for summing

and assigning the appropriate output channel to both the the

string-resonated and sympathetically-resonated voices.

It is important to note that only the output instrument

contains an out unit generator. By not assigning the outputs of

"voice" and "sympathetic" within their respective instruments, they become accessible globally.

There are certainly musical applications for a system with this degree of integrated yet independent control. Most

important, a global design scheme, such as this, offers the means by which the

note-statement orientation of the cmusic

language can be circumvented.

Previously, all changes in the

settings of the instrument were made at the start-time of the note and were

determined by the settings of the various parameters. Now, with a global design, changes can affect an

instrument during a note rather than only at its outset. This

effectively models the cmusic orchestra on a real

orchestra in which instrumentalists perform their own parts, responding to all

its commands for execution, (i.e., phrase,

dynamic, and timbre indications), while simultaneously responding to the

general directions of the conductor.

The score

for example III.6C is in stereo. One channel contains the utput

of "voice,"

the other contains the output of the "sympathetic." The pitch of the source resonator

remains constant (87 Hz), while the sympathetically-resonated voices alternate

from a non-equal-tempered triad to an equal-tempered one.

Sound Example III.6D

demonstrates yet another global score model using sympathetic excitation. The score format has been simplified, by

assimilating the former's output instrument

into the sympathetic resonator instrument.

Whereas in the previous example, all three

sympathetically-resonated voices had the same

setting, in this one their finals and

respective timbres are slightly different.

In both

musical examples sympathetically-resonated voices significantly enrich the sonic character of the basic

string-resonator. Musical parallels for resonant techniques such as those demonstrated in examples III.6C and

D are also found in the contemporary literature.

For example, in Berio's Sequenza X for solo trumpet (1985), the trumpeter plays

into an opened piano while an onstage pianist silently depresses certain notes

and chords which effectively underscore the solo part with the desired harmonic

resonances. However, unlike those musical examples which employ

pianos as resonators, this particular

extension of the string-resonator allows for a continuous pitch shift of an

infinite number of sympathetically-resonated voices. The general musical

utility of the string-resonator is

substantially increased by an ability to alter and control its mode and degree of

excitation.

Generalized Resonators via Fast Convolution of Soundfiles

It was

shown that the Karplus-Strong plucked-string

algorithm provides the basis

for a simple yet powerful new technique for infusing speech with musical pitch.

The perceptual effect is akin to that of

speaking into the piano. But now, suppose that we wish to speak into

some other instrument. How can this new effect be obtained?

The

acoustical difference between the concert hall and the shower stall is that

each is

the manifestation of a slightly different filter. The pinnae

of the ear, the cavity of the mouth, the body of a violin, the wire connecting

speaker to amp, any medium through which a

musical signal passes can be considered a filter. And via the computer, it is

possible to mathematically simulate any filter, given the proper

description.

One means of implementing a

filter is via direct convolution. Convolution is a point-by-point mathematical

operation in which one function is effectively smeared by another. When an

arbitrary signal is convolved with the impulse response of a filter, the result

is the filtered output.

Traditionally, convolution has

been employed primarily to efficiently implement certain types of FIR filters. Thus, the required impulse response has

typically been obtained as the result of a standard filter-design

algorithm. In principle, however, any digital

signal can serve as the impulse response in the convolution process. It is

precisely this observation which constitutes the starting point for the

chapter to follow. Digital Filters

Linear

filtering is the operation of convolving a signal with a filter impulse response.24

Signals are represented in two fundamental domains, the time-domain and the frequency domain. Addition in one domain corresponds to

addition in the other domain. Thus, when two

waveforms are added, it is the same as adding their spectra. Multiplication in

one domain corresponds to convolution in the other domain. Thus, when two waveforms are multiplied, it is the same as convolving

their spectra. Similarly, when two spectra are multiplied, their

waveforms are convolved. Thus, since linear filtering is the operation of

convolving a signal x(n) with a filter impulse

response h(n), it is equivalent to multiplication in the spectral domain.

Convolution, like addition,

multiplication, subtraction, or division, is a point-by point operation which

can be performed on two digital waveforms. It is the process by which

successively delayed copies of x(n) are weighted by

successive values of h(n) and added together, effectively "smearing"

x(n) with h(n).

Thus, if h(n) = u(n) (i.e., the function of x(n) is convolved with a

single impulse), it is clear that x(n) remains unchanged. The

impulse is said to be an " identity" function with regard to

convolution because convolving any function with an impulse leaves that function unchanged. If the impulse is

scaled by a constant, the result is x(n) scaled

by the same constant. And if the impulse is shifted (i.e., delayed), then the

function is also delayed.

Convolution is a means to

implement a linear filter directly. Convolving a signal with a filter impulse

response gives the filtered output. That a filter is linear means that when two signals are added together and fed into

it, the output is the same as if each signal had been filtered separately and the outputs then added. together. In other words, the response of a linear system to a sum of signals is the sum of the

responses to each individual input signal.

Any

linear filter may be represented in the time domain by its impulse response. Any signal x(n)

may be represented in the time domain by its impulse response. Any signal x(n) may be regarded

as a superposition of

impulses at various amplitudes and arrival times

(i.e., each sample of x(n) is regarded as an impulse with amplitude x(n) and

delay of n).

By the superposition

principle

for linear filters, the filter output is simply the superposition of impulse responses, each having a scale factor and time shift

given by the amplitude and timeshift of the

corresponding impulse response.25

Each

impulse causes the filter to produce an impulse response. If another impulse arrives at the filter's input

before the first impulse response has died away, then the impulse response for both impulses is

superimposed (added together sample by sample). More generally, since the input is a linear combination of impulses,

the output is the same linear combination of impulse responses.26 Fast convolution, (the method

used by the CARL convolvesf program) takes advantage of the Fast Fourier Transform

(FFT) to reduce the

number of calculations necessary to do the convolution.

A

Conventional Application: Filtered Speech

The first set of sound examples, IV.OA

- C, demonstrates a conventional application of the CARL convolvesf

program. Three simple FIR filters are designed via a standard filter-design

program, and each, in turn, is applied to the same speech soundfile.

The filter-design program, fastfir, expects the user to: specify

the number of samples in the impulse

response, select one of four filter types (low pass, high pass, band pass, or

band reject), specify the window type (e.g., Hamming, Kaiser, etc.),

specify the amount of stop band attenuation, and specify the cutoff frequency.

All three filters in this series of examples

have impulse responses of 4096 samples, use Kaiser windows, and specify a 120dB

stop band attenuation at the band edges.

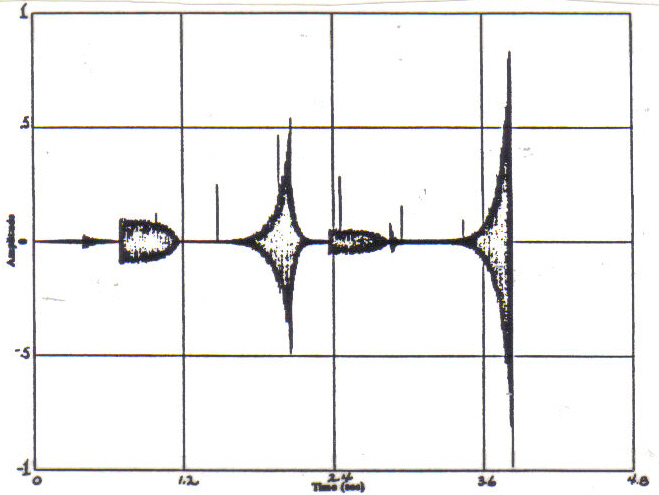

Sound Example IV.OA demonstrates the

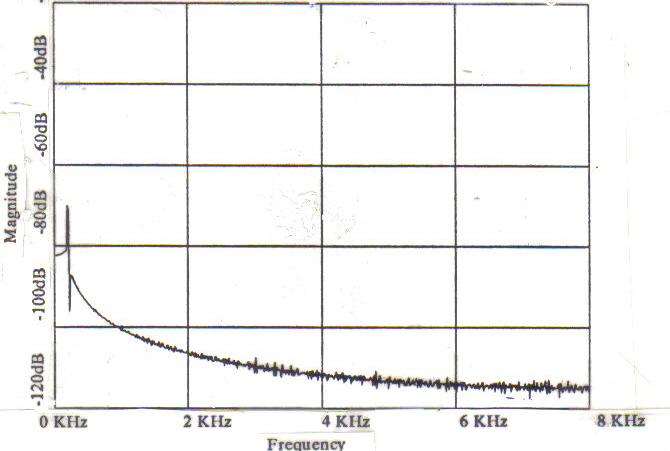

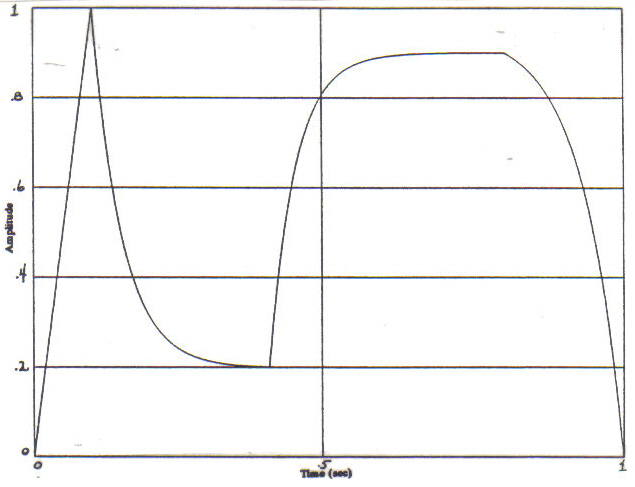

convolution of a speech soundfile with the impulse

response of a low pass filter with a cutoff frequency of 200Hz. Figure 1 is the

magnitude o 0 of

the Fourier transform of the filter's impulse response.

Figure 1: Magnitude of the FFT of the Impulse Response of

Filter IV.OA

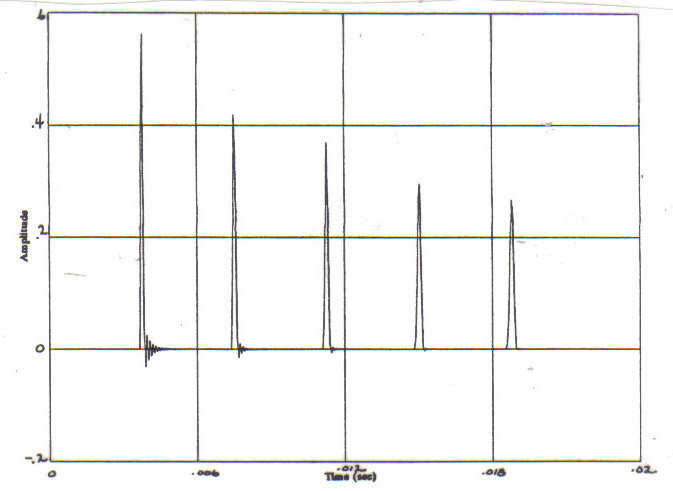

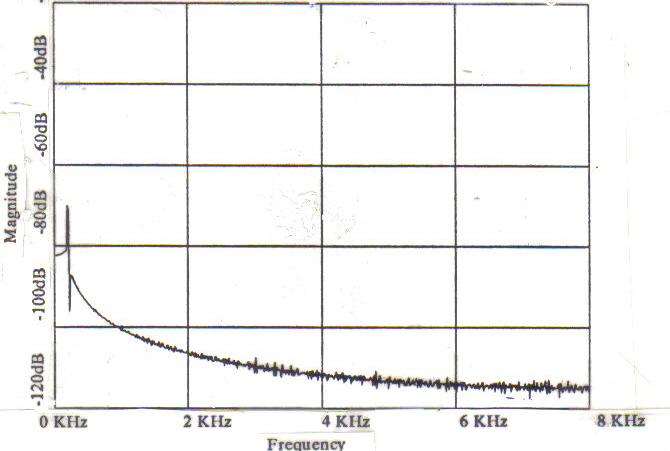

Sound Example IV.OB demonstrates the convolution of a

speech soundfile with the impulse response of a high pass filter with a cutoff frequency of 3kHz. Figure 2 is the magnitude of the Fourier

transform of the filter's impulse response.

Figure 2: Magnitude of the

FFT

of

the Impulse Response of Filter IV.OB

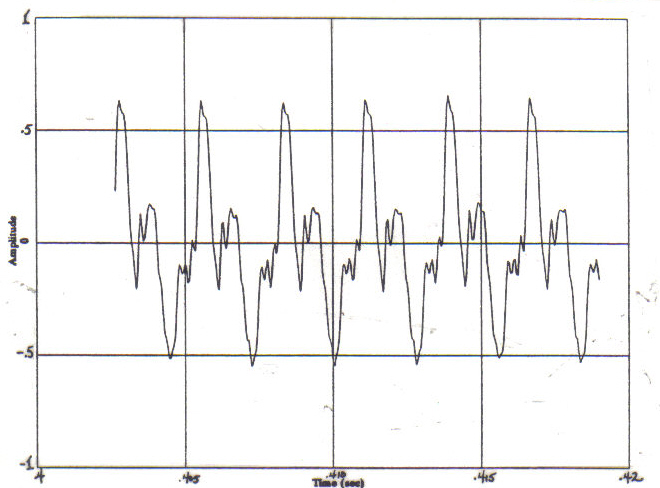

Sound Example IV.00 demonstrates the convolution of a speech soundfile

with the impulse response of a bandpass filter with a cutoff frequencies at 400 and 700Hz. Figure 3 is the

magnitude of the Fourier transform of the filter's impulse response.

Figure 3: Magnitude of the FFT of the Impulse Response of

Filter IV.00

There are numerous musical

applications of non-time-varying filters such as these. One

such subset comes under the general heading "equalization." In

recording engineer terminology, filters such as these provide control of the

"presence," "brilliance," "smoothness,"

"muddiness," "boominess," etc.,

of a recording or broadcast via simple large scale re-adjustment of its relative spectral levels. Another class of musical

applications comes under the general heading "noise

suppression." Simple filters as these have often been used to remove

unwanted "hiss" and "hum" from less than optimal location

and studio recordings.

As concerns the general focus of

this article (i.e., transforming speech into music), it

is clear that a wide variety of unique filters might be so designed and the

spoken text transformed via its convolution with their impulse responses.

However, what is more interesting and

significant are the musical possibilities of using impulse responses other than

those produced via standard filter-design programs.

A

New Application: Reverberation

To date, the only suggested musical

application for fast convolution (other than as an

efficient means of implementing FIR filters) has been as an unusual technique

for artificial reverberation. Via convolution, it is possible to generate the

ambiance of any room and to place any sound within that room. This is done by

convolving an arbitrary input with the

impulse response of the desired room. For example, if the desired musical goal

is to have one's violin piece played in Carnegie Hall, all

that is necessary is to convolve a digitized recording of the piece

with the digitized impulse response of Carnegie Hall. This is exactly what Moorer and a team of researchers from IRCAM were exploring when they made a "striking discovery-

regarding the simulation of natural-sounding room impulse responses.

Moorer

and his team collected the impulse responses from concert halls around the

world for study. While digitizing them, they "kept noticing that the

responses in the finest concert halls sounded remarkably similar to white noise

with an exponential envelope."27 To test their observation,

they generated synthetic impulse responses with exponential decays and then

convolved them with a variety of unreverberated

musical sources. Moorer

reported that the results were "astonishing" and suggested a number

of extensions to this technique. Ultimately, though, Moorer

dismissed this reverberation method because of the "enormous amount of

computation involved" (Moorer used direct convolution), and because of the fact that,

"even via fast convolution," real-time operation was

"still more than a factor of ten away for even the fastest commercially

available signal processors.- However, when the concern is

"musical potential" as opposed to "real-time potential," fast convolution of speech with exponentially

decaying white noise proves to be a rich source of new musical effects.

Sound Examples IV.1A and B

demonstrate the convolution of a speech soundfile with

two synthetic rooms consisting of single impulses followed by exponentially

decaying white noise. The only

difference between the two is the length of their "tails." The first is 1.9 seconds, and the second is 3.9

seconds. Both of these examples (IV.1A and B) confirm that a synthetic impulse response composed of exponentially

decaying white noise produces an extremely "natural sounding"

and "uncolored" "room." They also clearly demonstrate that

reverberation via fast convolution of a synthetic room response is a powerful tool for computer music. Obviously, a

"clean" and controllable reverberator such as this has numerous musical applications,

particularly in the area of record production.

Sound

Example IV.1C is the cmusic realization of another Moorer suggestion. He notes that

by "selectively filtering the impulse response before convolution, one could control the rolloff rates at

various frequencies" and thereby produce highly -colored

rooms.28

In this example speech is convolved with a synthetic room consisting of a

single impulse

followed by 2.9 seconds of exponentially decaying, band-limited white noise. In

the score the NOISEBAND argument has been

set to 3KHz, and the sound result is quite muted

- a -low-pass filtered" room.

Within

the cmusic software synthesis environment, it was

quite simple to design a model score which allowed the user to "tailor"

the room to a wide range of musical needs. This score

model provided software "knobs" for the direct specification of

DRYAMP (direct

signal), WETAMP (reverberated signal), NOISEBAND (i.e., tone), and DECAY-LENGTH. Admittedly, all four of these controls are

found on every moderately priced reverb

unit, and each is mentioned by Moorer as being

directly applicable in just this context. However, a musically

significant control included in the model score above, and to date, missing

from all analog and most digital reverberators (and

which seems to have been ignored by the Moorer team as well), allows the SLOPE of the decay to be

arbitrarily controlled. As it turns

out, this is the key to creating a whole new class of "spaces" -merely

by convolving sources with synthetic rooms which have other than exponential

slopes.

The

following two sound examples illustrate this new effect. Sound Example IV.1D

demonstrates the fast convolution of speech with a room response composed of logarithmically

decaying white noise. The resultant -space- is far less -roomlike- than it is -cloudlike.-

Thus, I call rooms such as these "cloud rooms."

Another unique class of

"rooms" results from the fast convolution of an arbitrary input with exponentially increasing white noise.

Sound Example IV.1E is such a room. In this

example, it is quite interesting how the spoken text is smeared by the process,

almost sounding as if it was played backwards. The room impulse response

is 3.9 seconds in duration, and the noise noise ramps up from silence to - 24dB below the level of the initial impulse.

I refer to rooms of this type as -inverse rooms.-

Sound

Examples IV.1A - E show how convolvesf and cmusic can be used to design and implementing a wide variety

of synthetic performance "spaces." The possibilities range from

extremely "natural sounding" rooms to some truly unique ones - rooms,

for example, in which the apparent

intensity of the source grows rather than fades. Although in the eyes of a recording engineer a highly

colored room might be quite undesirable, to a composer, it may provide just the necessary means by which certain sound

structures can be differentiated. An "uncolored" room response

is merely one point in a musically valid sound continuum.

Furthermore, the simple

addition of a decay-slope control to the standard set of reverberator controls has been shown to create a unique and wide ranging set of new

musical possibilities. In the case

of (logarithmically decaying) "cloud rooms" or (exponentially increasing)

"inverse rooms" the "basic" reverberator

is turned into a powerful new transformational tool.

A

New Way To Combine Musical Sounds: Generalized

Resonators

Clearly, a number of

significant new musical applications result from simply extending the current

use and understanding of convolution (e.g., "cloud rooms,"

and "inverse rooms"). In addition to this, however, the

convolution process can provide a totally new way to combine musical sounds.

In the case of filtering, the

impulse response of a desired filter is produced with the aid of a basic filter-design program. In the case of reverberation,

the impulse response of any

"room" can be produced by synthesizing noise of varying

"colors" and decay-slopes.

However these are not the only forms of digital signals which can serve as impulse

response. Via convolution, any sound can be thought of as a

"room" or "resonator." This has always been known by those

who have understood the convolution process, yet, to this point in time, the musical significance of this simple fact has

gone largely unexplored. (of what use is a filter which rattles like a tambourine to a

recording engineer who's main concern is the 60Hz hum in his control

room?)

But just as it is possible to

play one's violin piece in Carnegie Hall by convolving it with the hall's

impulse response, so too is it possible to play that same piece inside a

suspended cymbal by convolving it with the sound of a suspended cymbal. For, as

was noted in the introduction of this

article, the only difference between Carnegie Hall and the suspended

cymbal (besides the seating) is that, acoustically, they are manifestations of

two slightly different filters.

Convolution

provides a more general means by which speech can be infused with pitch. Since

it is possible, via convolution, to combine any two sounds, one need only find a sound with the desired pitch

and convolve it with speech. The speech can be infused with the pitch (and timbre) of the "found sound" because the

convolution of any two sounds

corresponds to their "dynamic spectral intersection.-

Thus, there is only energy in the

output at frequencies where both inputs have energy. Since the noise components

of speech have energy at all frequencies, the product of the spectral

intersection with a pitched "found sound" has a definite pitch.

Convolution is more generalized

than the -string-resonator." The Karplus-Strong plucked-string

algorithm is merely a difference equation which, due to the recursion relation, happens to efficiently

produce a musically interesting impulse response. But the resulting sound is always "string-like."

Convolution literally provides the composer/sound-designer

with an orchestra of pitch infusers, both harmonic and enharmonic.

Moreover, the filter (i.e., impulse response) can have musical meaning.

With

respect to real-time implementation, the advantage of the -string-resonator

is

that it is a one-step process and very computationally efficient. On the other

hand, convolution is a two step process:

(1) find or design the impulse response, (2) convolve it with the source.

Furthermore, convolution (even fast convolution) is very computationally

intensive. Also, in the case of fast convolution, there is an inherent

block-delay due to the fact that an entire FFT-buffer of input must be

collected before any output can be produced. Thus, the techniques which follow

are inherently non-realtime.

The musical model which most

closely resembles the use of the Karplus-Strong plucked-string algorithm as a resonator is that of

someone shouting into a piano with the sostenuto pedal

depressed. Convolution takes this musical practice several steps further. Via

convolution, it is possible to "speak from within" bassoons, violins,

cymbals, orchestras, and even other voices.

It is important to note that

the musical utility of convolution is not limited to finding the

"right" sounds. Actually, it is the combination of controllability

and variety which make convolution so

musically significant. Three possibilities exist as regards the "ideal resonator:" (1) find the

"right" sound, (2) modify the "found sound," or (3) synthesize the sound "right."

Any "found sound" can

be made into the -right- sound. Given a phase vocoder and a software

synthesis environment such as cmusic, this operation

is fairly straightforward. For

example, suppose the structural mandate of the compositional process requires

that a specific spoken word be infused with the timbre of an antique cymbal,

but the pitch of the cymbal is too high for an effective spectral

intersection. With the phase vocoder it is a simple

matter to independently time-compress or pitch-transpose the sound to the exact

frequency which is required.

It is

also a- simple matter to synthesize a sound with the spectral characteristics which satisfies the

compositional imperative (which is exactly how the reverberation examples were done). For example, if the

compositional necessity is to infuse speech with the sound of a plucked-string,

one can synthesize the sound of a plucked-string (possibly by using the Karplus-Strong plucked-string algorithm) and convolve the

speech with it. In fact it is actually possible to deduce whether a given

"found sound" is the "right" soundfor

a particular application by understanding the way in which the sound will

function as an impulse response.

There are

four aspects of the impulse response which offer the user direct control over the

characteristic of the resulting spectral intersection. These four aspects are, (1) the relative level of the

initial sample (2) the character of a single period, (3) the overall temporal

envelope, and (4) the extent to which the response is composed of discrete

perceptual events.

The relative level of the

initial impulse sample determines the amount of "direct" signal present in the output. Just as with the

reverberation examples demonstrated previously, the initial impulse sample is the means of controlling the

relative mix between the direct and "filtered" sound. It is a simple

matter to add a gain-adjusted, single impulse to the beginning of any

"found sound," and to thereby produce the desired balance between the

source and "resonance."

The

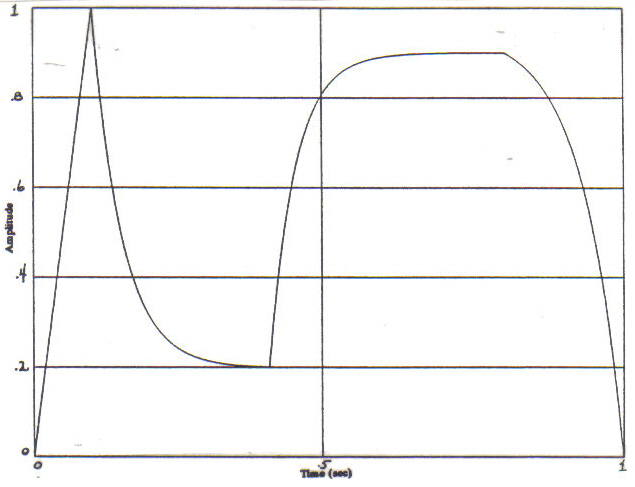

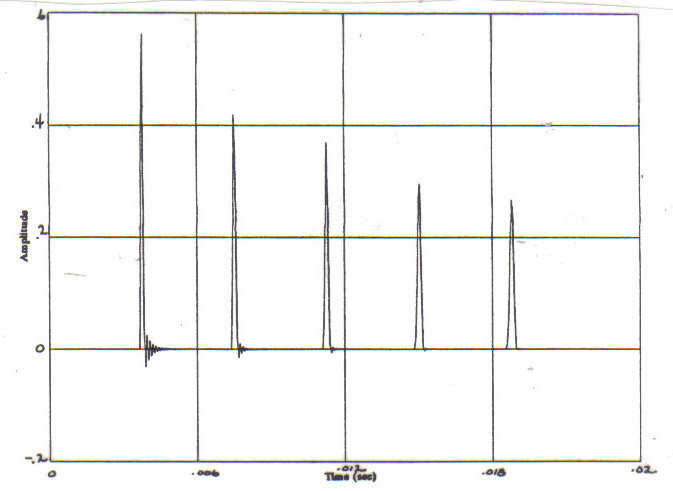

character of a single period (at least for pitched impulse responses) corresponds with the degree of

"reverberance." A pitched "found

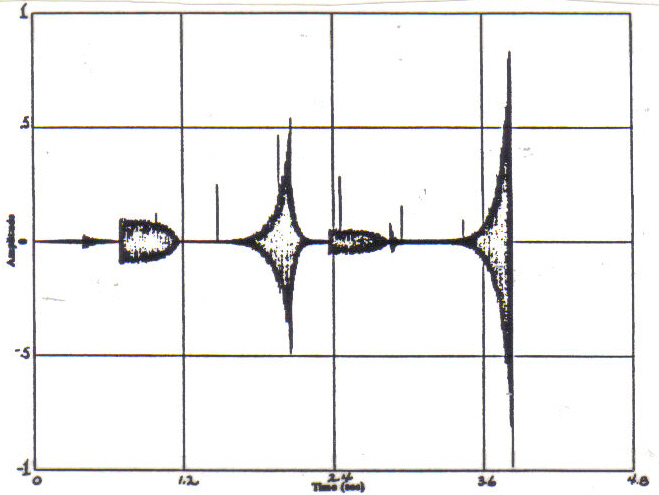

sound," with only an isolated peak per period (as shown in Figure 4) will

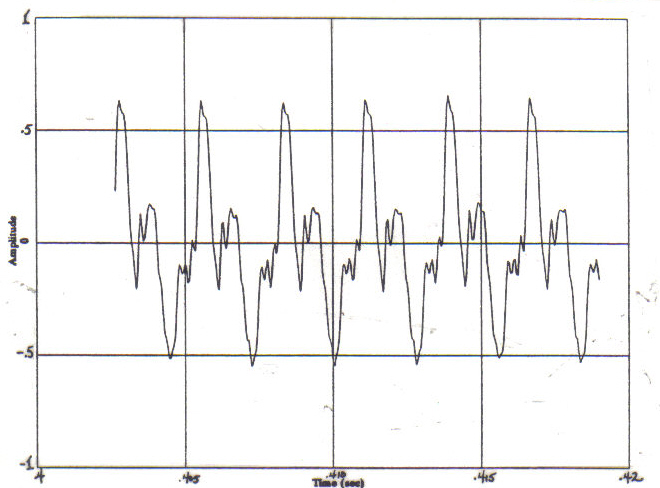

typically infuse the speech only with a specific pitch. On the other hand, a

sound (such as that in Figure 5) with many comparably-sized

peaks per period will both, infuse the speech with pitch, and also infuse the speech with other general attributes as well.

Typically, the two individual components (i.e., speech and pitched

"found sound"), retain more of their own identities. In this case, the speech is not merely infused with

pitch. Rather, the spectral intersection is more akin to speech coming

from within some "object" which is highly "colored" with a

certain pitch.

Figure

4: A sound with an isolated peak per period

Figure

5: A sound with many comparably sized peaks per period

Another

control over -reverberance- is

the time-domain envelope. "Found sounds" with exponentially

decaying time-domain envelopes will generally result in a reverberant quality.

Obviously, one can apply an exponential envelope to any "found sound" to control its relative "reverberance." From the macroscopic level, one can

shape a "found sound" by imposing any imaginable temporal

envelope to produce a variety of musical

results: from the microscopic level, one can effect a similar general

transformation by mixing in, or

filtering out, various amounts of noise (this is one musical situation where

a "noisy" source recording may be "better" than a quiet

one).

A different kind of control can

be obtained by "slowing down" the spectral evolution of the

"found sound" by time-expanding it via the phase vocoder.

In this way the impulse

response of a rapidly varying filter can be transformed into a slowly varying

one.

Lastly,

if the pitch of the impulse response is fixed, the degree of spectral intersection can be determined simply

by the duration of the impulse response. In general, the shorter the impulse response is, the wider the

spectral bandwidths of the resonant peaks are. In a hypothetical case

where the impulse response is restricted to being a strictly periodic impulse train, the bandwidth can be

directly related to the duration of the impulse response by the

following equation:

BW= 1/T

where

BW is the bandwidth of the spectral

peaks, and T is